Level: Big project (Bachelor's thesis)

GitHub Repository: https://github.com/JaumeAlbardaner/mppi_trainer

Why an "MPPI Trainer"?

During my stay at the Autonomous Robotics and Perception Group (ARPG) at the University of Colorado Boulder, I implemented the MPPI algorithm on a NinjaCar platform. Because the MPPI controller simulates control inputs into the future in order to choose the best command to apply, it needs to know how the car will react to different inputs. This dynamics model has been implemented in two ways: with a series of functions (base functions) or with a neural network. For my implementation of MPPI I preferred the neural network. Although I eventually managed to make the controller work with both models, the base functions initially broke the controller at seemingly random moments. As the neural network seemed more robust, I pursued its training in order to adapt it to the dynamic model of our NinjaCar.
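To illustrate why the controller depends on a dynamics model, here is a minimal sketch of one simplified MPPI update in plain Python. It is a toy, not the AutoRally implementation: it omits the control-cost term and any smoothing, and every name in it (`mppi_step`, `toy_dynamics`, `toy_cost`) is hypothetical. The `dynamics` argument is the slot that the base functions or the neural network would fill.

```python
import math
import random
from typing import Callable, List, Optional


def mppi_step(
    state: float,
    nominal: List[float],
    dynamics: Callable[[float, float], float],
    cost: Callable[[float], float],
    samples: int = 1000,
    sigma: float = 1.0,
    lam: float = 10.0,
    rng: Optional[random.Random] = None,
) -> List[float]:
    """Return an updated control sequence after one simplified MPPI iteration."""
    rng = rng or random.Random(0)
    horizon = len(nominal)
    noises: List[List[float]] = []
    costs: List[float] = []
    for _ in range(samples):
        # Perturb the nominal controls and roll the model forward in time.
        eps = [rng.gauss(0.0, sigma) for _ in range(horizon)]
        x, total = state, 0.0
        for u, e in zip(nominal, eps):
            x = dynamics(x, u + e)  # this call is where the dynamics model is needed
            total += cost(x)
        noises.append(eps)
        costs.append(total)
    # Cheaper rollouts get exponentially larger weights.
    best = min(costs)
    weights = [math.exp(-(c - best) / lam) for c in costs]
    norm = sum(weights)
    return [
        u + sum(w * eps[t] for w, eps in zip(weights, noises)) / norm
        for t, u in enumerate(nominal)
    ]


def toy_dynamics(x: float, u: float, dt: float = 0.1) -> float:
    """Assumed 1-D model for illustration: the control is a velocity."""
    return x + u * dt


def toy_cost(x: float, target: float = 1.0) -> float:
    """Squared distance to a target position."""
    return (x - target) ** 2


controls = mppi_step(0.0, [0.0] * 10, toy_dynamics, toy_cost)
```

If `dynamics` predicts the wrong motion, every rollout is scored against a trajectory the real vehicle would not follow, which is why the quality of the model matters so much for the controller.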

Previous work:

In the official GitHub repo of the AutoRally platform, a series of neural network models is provided:

https://github.com/AutoRally/autorally/tree/melodic-devel/autorally_control/src/path_integral/params/models

However, the description given of the neural network models is not detailed enough to let others adapt them to a different platform.

I then searched for anyone else who had approached this problem, and found a repository whose author had worked on this exact algorithm:

https://github.com/rdesc/autorally

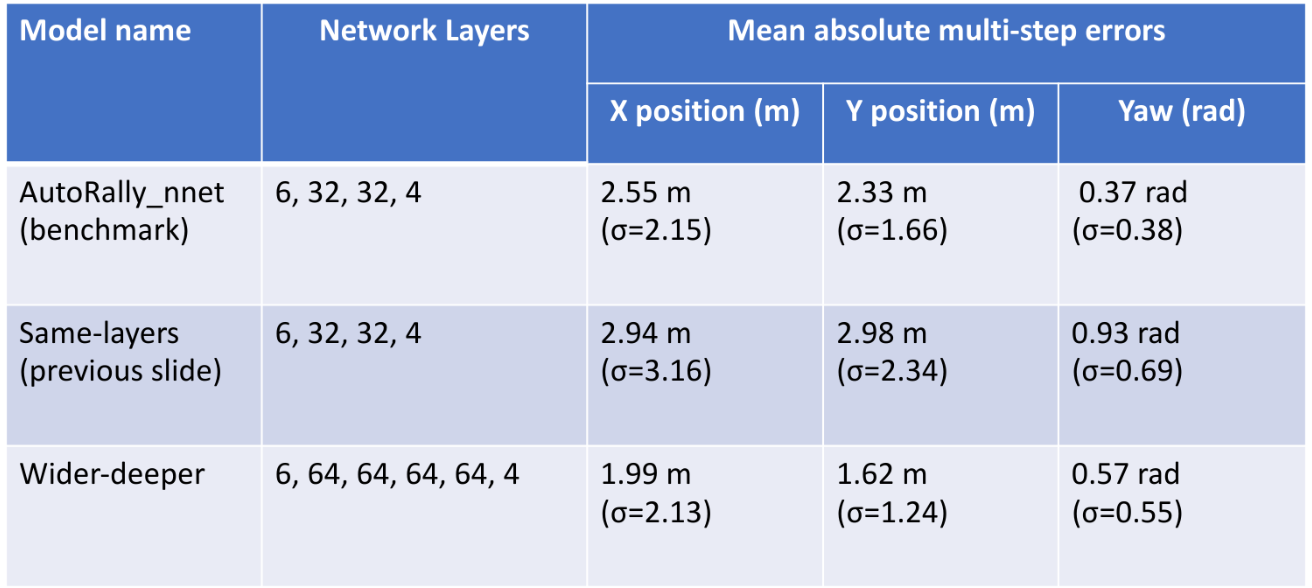

Although the author only worked on the Gazebo simulator, the results seemed too good to ignore:

Despite the great reported results, when the newly trained neural network was loaded into the MPPI algorithm, the simulation showed the vehicle moving in a physically impossible manner.

Custom trainer:

Seeing the car move sideways made me think that there was an issue either with the z axis (as described in the output section of the MPPI trainer repository) or with what the inputs and outputs of the neural network had to be.

After messing with the z axis for a while, I realized that the main issue had to be the training of the neural network.

Initial info:

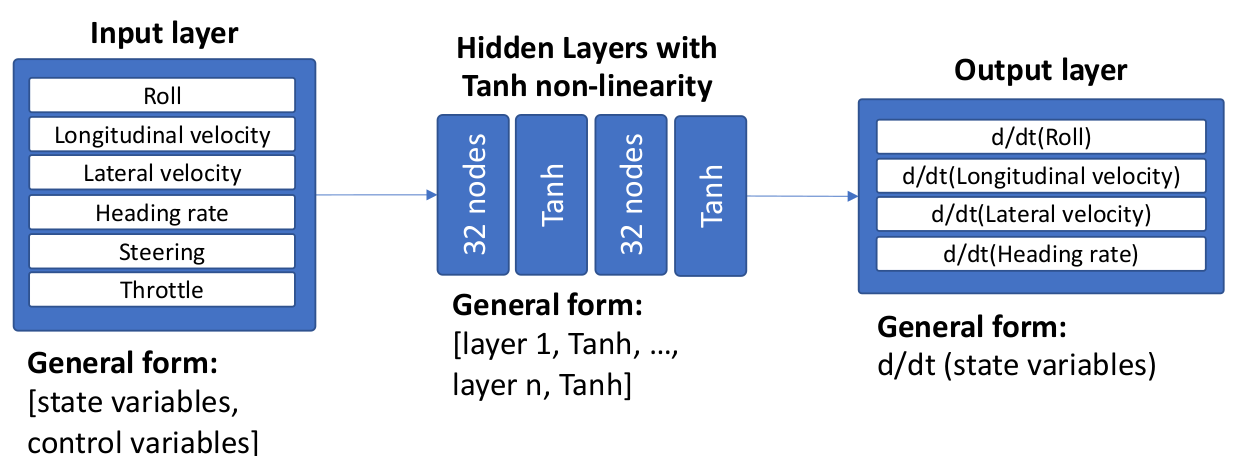

According to the AutoRally repo: "All of the models take as input 4 (roll, longitudenal velocity, lateral velocity, heading rate) state variables and the commanded steering and throttle, and they output the time derivative of the state variables". However, there is no information regarding the order in which this information must be fed into the neural network.

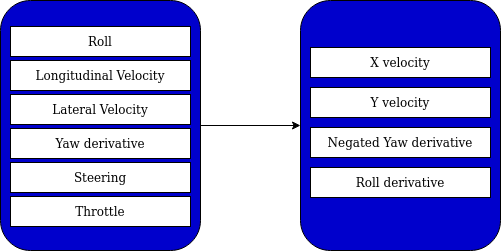

The trainer that I had initially used had the following structure set for inputs and outputs:

Actual data:

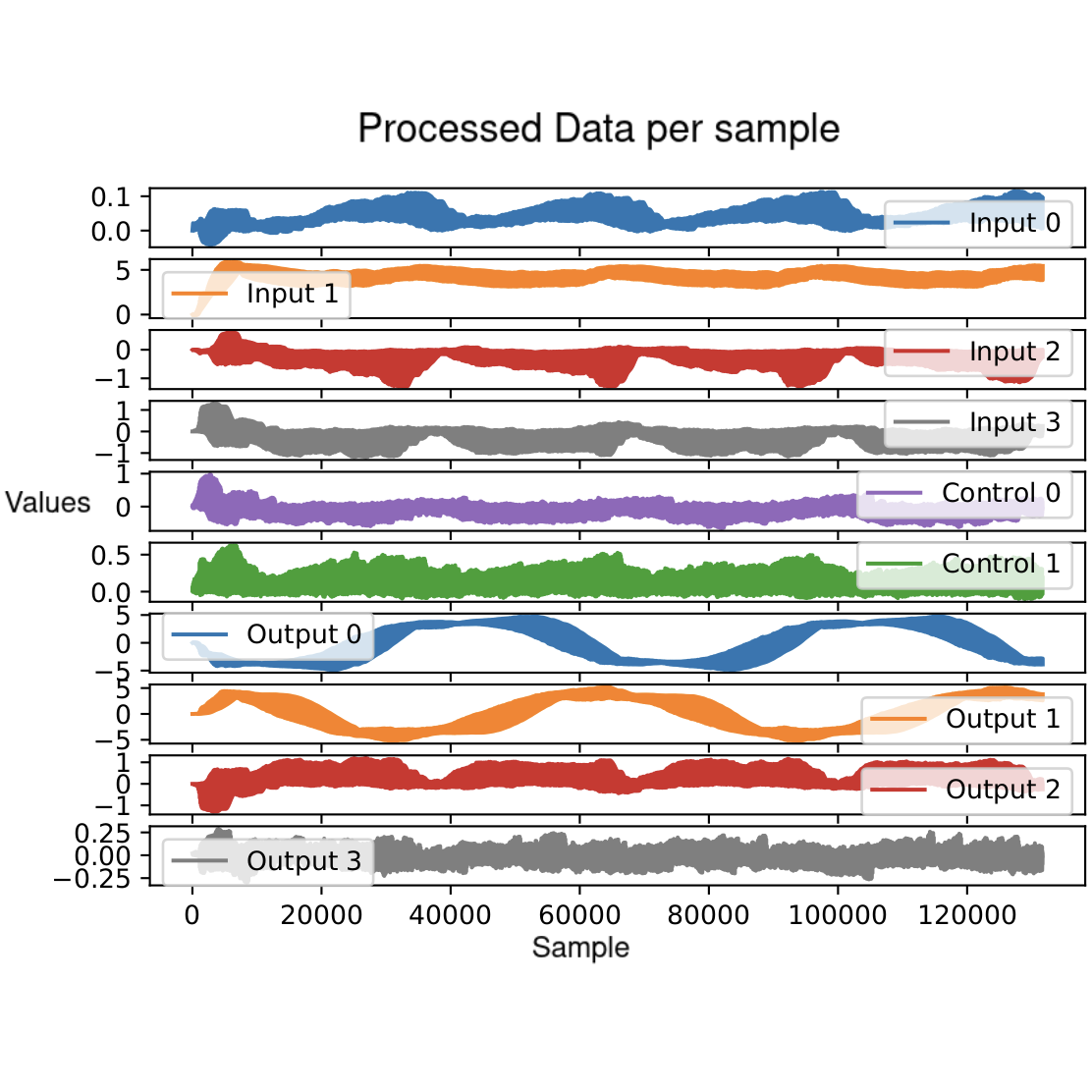

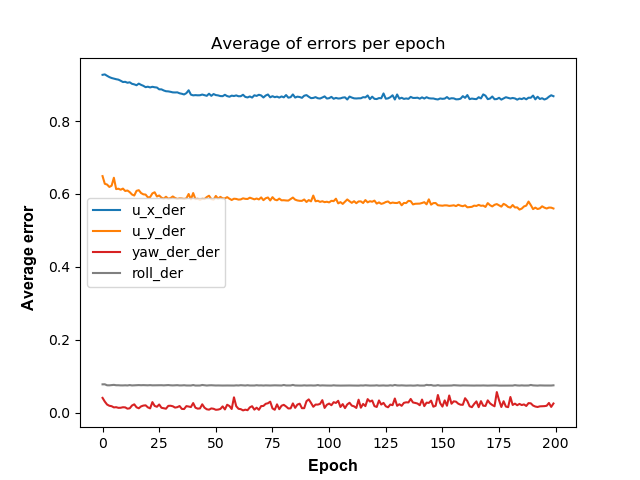

To verify whether the previous structure was correct, I ran the simulation and printed the data that was fed into the neural network (4 state variables + 2 controls) and the data it returned (4 outputs). This yielded the following graph:

Resulting structure:

By analysing the code alongside the graph, I deduced that the neural network relied on the following structure:

As can be seen, the structure that was obtained is very different from the one used in the previous repo. Each of these variables was deduced in the following manner:


NN inputs (in NinjaCar frame)

- Input0: This variable only takes values between 0 and 0.1, and it is always positive. It is therefore reasonable to assume that it stands for the roll (rotation about the longitudinal axis of the car).

- Input1: This variable approaches a value of 6 but never reaches it. It should be the longitudinal velocity.

- Input2: This variable is about 0 while the longitudinal velocity is near its maximum and only increases from then onward, which suggests that this is when the vehicle enters the curve (and drifts increasingly more). It should be the lateral velocity.

- Input3: This variable should be the yaw derivative, as it is known to be part of the inputs but had not appeared yet.

- Control0: Limiting the steering changed the values of this variable, so this is the steering.

- Control1: Limiting the throttle changed the values of this variable, so this is the throttle.

NN outputs (world and NinjaCar frame)

- Output0: Although this is not mentioned anywhere except inside the code, this variable corresponds to the velocity of the vehicle projected onto the X axis of the world frame.

- Output1: Following the same principle, this variable corresponds to the velocity of the vehicle projected onto the Y axis of the world frame.

- Output2: According to the information provided within the code, this variable corresponds to a negated yaw derivative (NinjaCar's frame).

- Output3: No other information could be found on what this variable is, so it should be the roll derivative (NinjaCar's frame).
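The ordering deduced in this list can be written down as a small sketch. Only the order of the entries comes from the analysis in this section. The identifiers (`NN_INPUTS`, `NN_OUTPUTS`, `pack_input`, `unpack_output`) are hypothetical and do not appear in either repository.

```python
from typing import Dict, List

# Order deduced from the logged data; the names themselves are illustrative.
NN_INPUTS = [
    "roll",              # Input0, NinjaCar frame
    "vel_longitudinal",  # Input1, NinjaCar frame
    "vel_lateral",       # Input2, NinjaCar frame
    "yaw_derivative",    # Input3, NinjaCar frame
    "steering",          # Control0
    "throttle",          # Control1
]
NN_OUTPUTS = [
    "vel_world_x",         # Output0, world frame
    "vel_world_y",         # Output1, world frame
    "neg_yaw_derivative",  # Output2, NinjaCar frame, negated
    "roll_derivative",     # Output3, NinjaCar frame
]


def pack_input(sample: Dict[str, float]) -> List[float]:
    """Arrange a named sample into the 6-element vector (4 state + 2 control)."""
    return [sample[name] for name in NN_INPUTS]


def unpack_output(vector: List[float]) -> Dict[str, float]:
    """Attach names to the 4-element output vector."""
    if len(vector) != len(NN_OUTPUTS):
        raise ValueError(f"expected {len(NN_OUTPUTS)} outputs, got {len(vector)}")
    return dict(zip(NN_OUTPUTS, vector))
```

Keeping the order in a single place like this makes it harder for the training data and the controller to disagree about which column is which.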

New trainer:

After finding out the structure of the neural network, a model was trained using this new structure. Although the errors that were obtained were not low, the model that resulted from the training followed the laws of physics this time.

Across the different training sessions, the lowest errors were obtained after a very careful preprocessing procedure, as there was a lot of noise in the data collected in the motion capture space.
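The exact preprocessing steps are not described here, so the following is only a sketch of one common approach and an assumption on my part: smooth each recorded signal with a centered moving average before differentiating it numerically. Since the network outputs are time derivatives, and numerical differentiation amplifies noise, some smoothing of this kind is usually needed. The function names are hypothetical.

```python
from typing import List


def moving_average(values: List[float], window: int) -> List[float]:
    """Centered moving average; the window shrinks near both ends."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd number")
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        lo = max(0, i - half)
        hi = min(len(values), i + half + 1)
        smoothed.append(sum(values[lo:hi]) / (hi - lo))
    return smoothed


def central_difference(values: List[float], dt: float) -> List[float]:
    """Time derivative by central differences, one-sided at the two ends."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two samples")
    derivative = []
    for i in range(n):
        lo = max(0, i - 1)
        hi = min(n - 1, i + 1)
        derivative.append((values[hi] - values[lo]) / ((hi - lo) * dt))
    return derivative


# Usage: smooth a noisy signal first, then differentiate it.
noisy = [0.0, 0.12, 0.18, 0.33, 0.38, 0.52]
rate = central_difference(moving_average(noisy, 3), dt=0.1)
```

The window length trades noise rejection against lag and loss of fast dynamics, so it would have to be tuned against the recorded data.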

After obtaining a working training algorithm, I improved the procedure by allowing training to start from the weights and biases provided in the AutoRally repository. However, this improvement was never tested, as my stay in the lab had already finished by then.