Level: Big project (Bachelor's thesis)

GitHub Repository: https://github.com/JaumeAlbardaner/ninjacar_mppi

Grade: A (9.3 out of 10)

Publication: https://upcommons.upc.edu/handle/2117/374035

What is "MPPI"?

MPPI stands for Model Predictive Path Integral (control). It is an algorithm used to control the behaviour of a vehicle. It works by simulating how the vehicle would react to many different inputs (the predictive part) and, based on the results, computing the control values that are sent to the vehicle.
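
The sample, simulate and weight loop can be sketched in a few lines of NumPy. This is a simplified CPU sketch, not the Georgia Tech implementation. The 1-D point-mass model, the cost function and all parameter values (num_samples, noise_std, lam, the horizon) are hypothetical choices made only for illustration.

```python
import numpy as np

DT = 0.1       # hypothetical time step (s)
TARGET = 1.0   # hypothetical target position for the toy task

def dynamics(x, u, dt=DT):
    # Toy point-mass model: x[..., 0] is position, x[..., 1] is velocity, u is acceleration
    pos, vel = x[..., 0], x[..., 1]
    return np.stack([pos + vel * dt, vel + u * dt], axis=-1)

def running_cost(x):
    # Squared distance to the target plus a small velocity penalty
    return (x[..., 0] - TARGET) ** 2 + 0.1 * x[..., 1] ** 2

def mppi_step(state, u_nom, rng, num_samples=500, noise_std=1.0, lam=1.0):
    """One MPPI update of the nominal control sequence u_nom (length = horizon)."""
    horizon = len(u_nom)
    noise = rng.normal(0.0, noise_std, size=(num_samples, horizon))
    x = np.tile(state, (num_samples, 1))  # one copy of the current state per rollout
    costs = np.zeros(num_samples)
    for t in range(horizon):
        # Simulate every perturbed control sequence one step forward (the "predictive" part)
        x = dynamics(x, u_nom[t] + noise[:, t])
        costs += running_cost(x)
    # Path-integral weighting: low-cost rollouts get exponentially larger weights
    weights = np.exp(-(costs - costs.min()) / lam)
    weights /= weights.sum()
    # New nominal sequence = old one plus the cost-weighted average perturbation
    return u_nom + weights @ noise

def run_demo(steps=50, horizon=20, seed=42):
    rng = np.random.default_rng(seed)
    state = np.array([0.0, 0.0])
    u_nom = np.zeros(horizon)
    for _ in range(steps):
        u_nom = mppi_step(state, u_nom, rng)
        state = dynamics(state, u_nom[0])  # apply only the first control of the sequence
        u_nom = np.roll(u_nom, -1)         # shift the sequence to warm-start the next iteration
        u_nom[-1] = 0.0
    return state
```

Only the first control of each optimized sequence is applied before re-planning, which is what makes the controller receding-horizon.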

Why an MPPI Implementation?

During my stay at the Autonomous Robotics and Perception Group (ARPG) at the University of Colorado Boulder, I was asked to find algorithms that could be compared to CarPlanner. After surveying the state of the art, I implemented one of them: MPPI. I chose it because a labmate had implemented it in the past, so it was known to be feasible.

The AutoRally platform

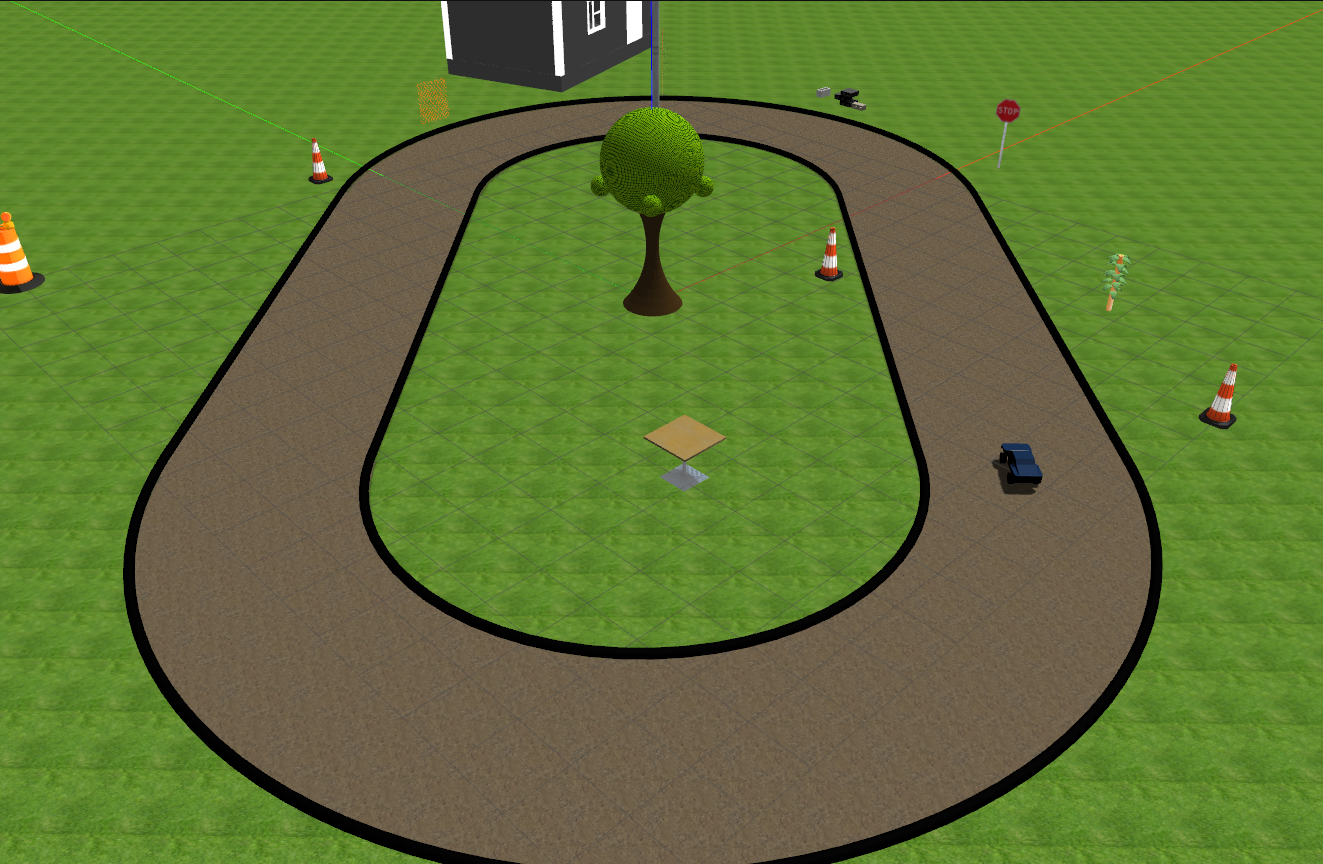

The MPPI algorithm was developed at Georgia Tech. Not only did they publish the controller with instructions on how to use it, but they also accompanied it with a Gazebo simulation in which one could watch the controller drive a car.

However, the platform on which I would be implementing the algorithm had limited computational resources, so none could be spent on the Gazebo simulation. Thus, the algorithm had to be separated from the simulator before being implemented on the NinjaCar.

The implementation

After successfully separating the algorithm from the original framework and implementing it on the NinjaCar, I was left with the contents of the ninjacar_mppi repository.

At this point the MPPI algorithm was running on the vehicle itself, and I could still observe how it controlled a simulated car:

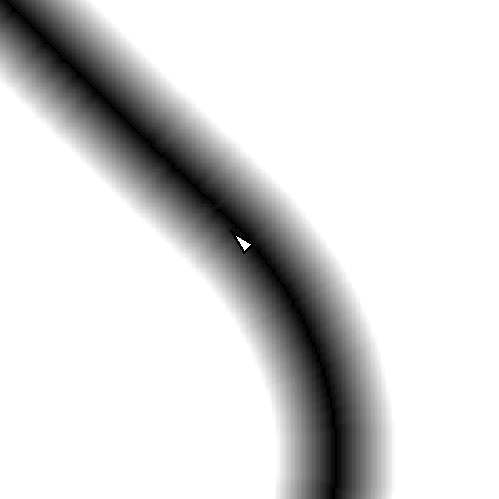

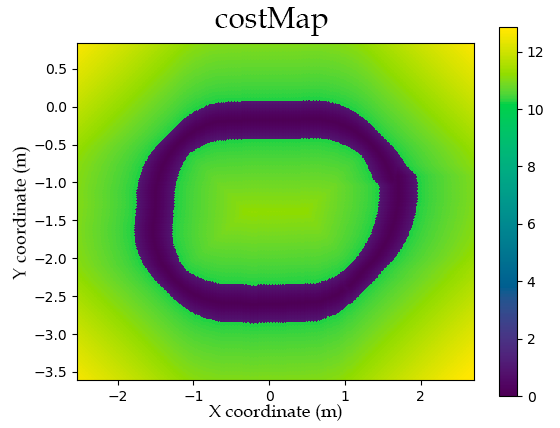

Map building

To customize the experience and have the car run on tracks that we could set up ourselves, I created a series of scripts that record the position of the car in order to generate a track.
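
The recording scripts themselves are not reproduced here. A minimal sketch of the idea, assuming the car's (x, y) position is already available each time a new pose arrives (for example from an odometry callback), could look like this. The class name, the file format (one "x y" pair per line) and the min_spacing filter are hypothetical.

```python
import math

class TrajectoryRecorder:
    """Hypothetical helper: appends car positions to a .txt file, one 'x y' pair per line."""

    def __init__(self, path, min_spacing=0.05):
        self.path = path
        self.min_spacing = min_spacing  # hypothetical: skip points closer than this to the last one
        self.last = None
        open(self.path, "w").close()    # start from an empty file

    def add_position(self, x, y):
        # Ignore positions too close to the previously recorded one (e.g. car standing still)
        if self.last is not None and math.hypot(x - self.last[0], y - self.last[1]) < self.min_spacing:
            return False
        with open(self.path, "a") as f:
            f.write(f"{x:.4f} {y:.4f}\n")
        self.last = (x, y)
        return True

def load_trajectory(path):
    """Read the recorded 'x y' lines back as a list of (x, y) float tuples."""
    with open(path) as f:
        return [tuple(map(float, line.split())) for line in f if line.strip()]
```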

As I wanted the maps to be as similar as possible to the ones provided by the platform, I had to deduce how they had been encoded by observing them.

After some time observing them, I obtained a similar map.

To generate the map, I followed this procedure:

- Record a trajectory (to a .txt file).

- Set a matrix (the map) to all zeros.

- Draw the recorded trajectory on it.

- Perform dilation (a computer vision technique) to compute the distance from every point of the map to the trajectory.

- Normalize the distance of the points close to the trajectory, so the track has a 1 on each side and a 0 in the middle.

- Add 10 to the distance of the rest of the points.
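
The map-generation steps above can be sketched with NumPy. Assumptions, all hypothetical: the recorded positions have already been converted to (row, column) grid cells, consecutive points are joined with straight segments, each dilation uses a 3x3 structuring element (so distances are counted in cells, Chebyshev style), "close to the trajectory" means within half_width cells, and the +10 is applied to the raw cell distance.

```python
import numpy as np

def dilate(mask):
    # One step of binary dilation with a 3x3 (8-connected) structuring element
    h, w = mask.shape
    padded = np.pad(mask, 1, constant_values=False)
    out = np.zeros_like(mask)
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            out |= padded[1 + dy:1 + dy + h, 1 + dx:1 + dx + w]
    return out

def build_track_map(points, shape, half_width):
    """Hypothetical sketch: points are (row, col) grid cells of the recorded trajectory."""
    if not points:
        raise ValueError("empty trajectory")
    # 1. All-zero map, then draw the trajectory (recorded cells joined by straight segments)
    drawn = np.zeros(shape, dtype=bool)
    for r, c in points:
        drawn[r, c] = True
    for (r0, c0), (r1, c1) in zip(points[:-1], points[1:]):
        n = max(abs(r1 - r0), abs(c1 - c0)) + 1
        drawn[np.rint(np.linspace(r0, r1, n)).astype(int),
              np.rint(np.linspace(c0, c1, n)).astype(int)] = True
    # 2. Repeated dilation: a cell first reached at step k is k cells away from the trajectory
    dist = np.zeros(shape)
    reached = drawn.copy()
    k = 0
    while not reached.all():
        k += 1
        grown = dilate(reached)
        dist[grown & ~reached] = k
        reached = grown
    # 3. Cells close to the trajectory: normalize so the centre is 0 and the edges are 1
    cost = np.empty(shape)
    near = dist <= half_width
    cost[near] = dist[near] / half_width
    # 4. All other cells: raw distance plus 10
    cost[~near] = dist[~near] + 10
    return cost
```

For a straight trajectory along row 5, build_track_map([(5, 2), (5, 12)], (11, 15), 3) gives 0 on the centre line, 1 three cells away and 14 four cells away, so the car sees a sharp cost jump when it leaves the track.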

Model generation

The MPPI controller can rely on either basis functions or a neural network to represent the dynamic model of the vehicle.
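
With basis functions, the dynamics are typically linear in the parameters: the state derivative is a weighted sum of fixed nonlinear features of the state and control, so the weights can be fitted with ordinary least squares. The sketch below assumes a toy two-dimensional state (velocity, yaw rate), a toy control (steering, throttle) and hypothetical features; the basis functions actually used by the AutoRally model are different.

```python
import numpy as np

def features(state, u):
    # Hypothetical basis functions of the state (v, r) and control (steer, throttle)
    v, r = state
    steer, throttle = u
    return np.array([1.0, v, r, throttle, steer * v, v * v])

def fit_basis_model(states, controls, derivs):
    # Least squares: find theta so that features(x, u) @ theta matches the observed derivatives
    phi = np.array([features(x, u) for x, u in zip(states, controls)])
    theta, *_ = np.linalg.lstsq(phi, np.asarray(derivs), rcond=None)
    return theta  # shape (num_features, state_dim)

def predict(theta, state, u):
    # Predicted state derivative for one (state, control) pair
    return features(state, u) @ theta
```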

The procedure to get the neural network working is explained in depth on this page.

However, getting the controller to work with basis functions required digging very deep into the algorithm. I eventually found that an interpolation mechanism (Differential Dynamic Programming, DDP) was breaking the controller. This turned out to be "fixable" simply by disabling use_feedback_gains in the launch file.
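
For context, DDP-style feedback gains usually enter the control law as a linear correction around the nominal trajectory, u = u_nom + K (x - x_nom). The sketch below only illustrates what disabling such a term leaves, namely the plain feed-forward MPPI control. The function and its arguments are hypothetical, and whether the AutoRally code applies its gains in exactly this form is an assumption.

```python
import numpy as np

def applied_control(u_nom, x_nom, x_meas, K, use_feedback_gains=True):
    """Hypothetical helper: control sent to the car at one time step."""
    u_nom = np.asarray(u_nom, dtype=float)
    if not use_feedback_gains:
        # Feedback disabled: send the MPPI (feed-forward) control unchanged
        return u_nom
    # Linear correction proportional to the deviation from the nominal state
    return u_nom + np.asarray(K) @ (np.asarray(x_meas) - np.asarray(x_nom))
```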

Final demo

Although I only finished debugging the neural network trainer after my stay in the lab, I still managed to get MPPI to control the NinjaCar: