Level: Big project (Bachelor's thesis)

GitHub Repository: Private

Grade: A (9.5 out of 10)

Publication: https://upcommons.upc.edu/handle/2117/354403

What is an "Active vision system for searching detecting and localizing objects in a real assistive apartment"?

As the name of the project implies, this system has to detect certain objects and, once an object is detected, deduce its location within the assistive apartment. An "assistive" apartment is like any other apartment, except that it has been adapted for people who, for whatever reason, may have a harder time performing everyday tasks.

The apartment

The project was developed in the Perception and Manipulation lab at the Institut de Robòtica i Informàtica Industrial.

This lab includes a simulated apartment.

The project

The project was divided into three parts: the first two made up its main scope, and the last one was a later addition:

- Object detection

- Efficient search

- Robot commanding

Object detection

In order to locate objects within the apartment, the system first had to be trained to recognize them. After surveying the state of the art in object detection, YOLOv4 was chosen as the detection algorithm. However, using it required a dataset of images of the objects to be detected.

The dataset

The lab had part of the YCB object set available, so I first looked for a dataset of these objects that somebody had already published.

At first it seemed that somebody had done exactly that, as can be seen on the following website:

https://okabe.dev/ycb-video-dataset-download-mirror/

However, after training a model on the entire dataset, not only did it fail to detect the objects, it also detected objects where there were none.

I therefore set out to generate my own dataset, taking into account the variations the detector had to be robust to:

- Pose change

- Lighting change

- Occlusion

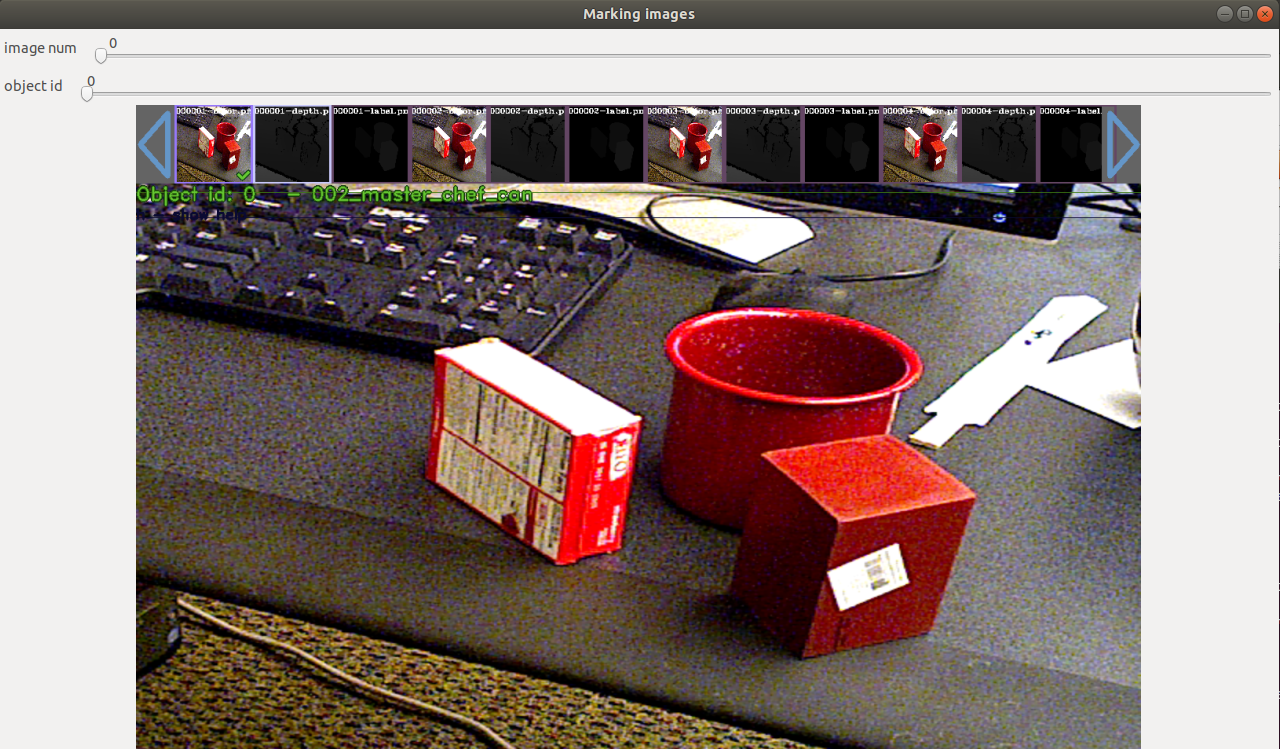

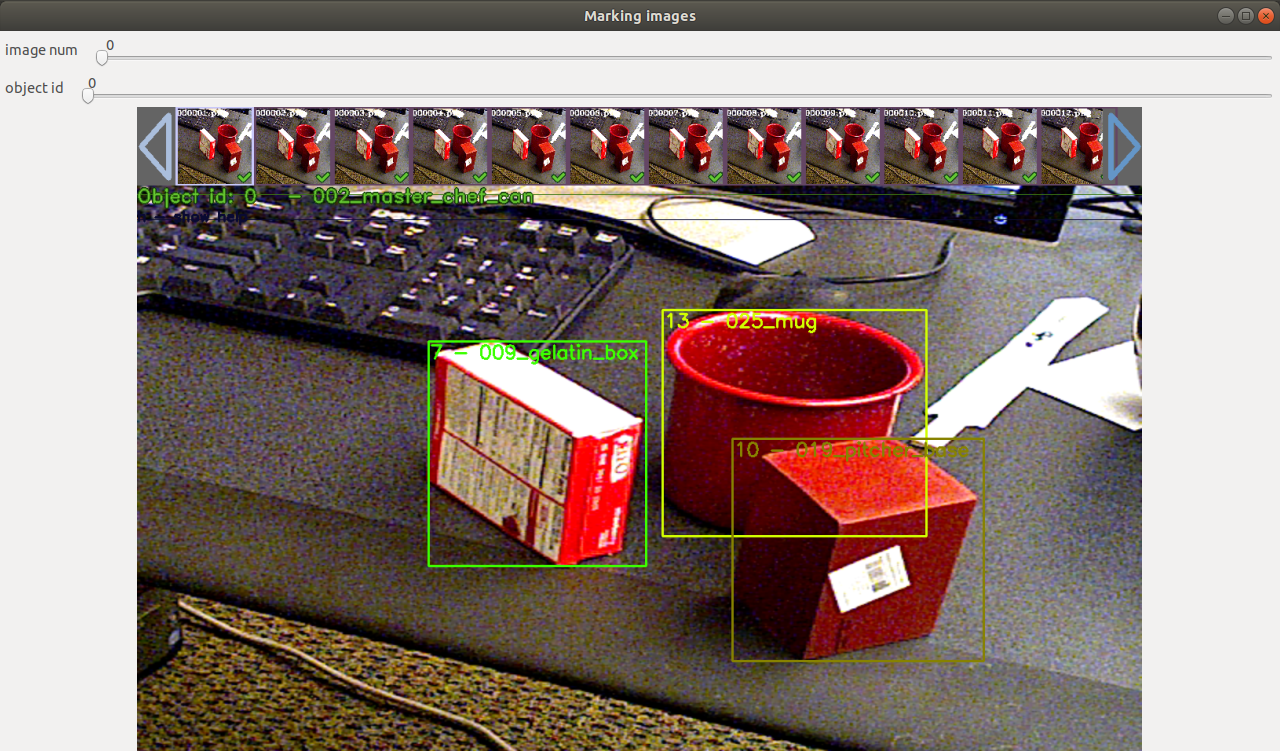

Afterwards, I used the labeling tool provided in the YOLO repository to label the objects in every picture individually.
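Darknet-style labeling tools store one text file per image, with one line per object in the YOLO format: the class index followed by the box center, width and height, all normalized by the image dimensions. The following is a minimal sketch of that conversion, assuming boxes given as pixel corner coordinates; the helper names are hypothetical and not part of the YOLO tooling.

```python
def to_yolo_label(class_id, box, img_w, img_h):
    """Convert a pixel box (x_min, y_min, x_max, y_max) into a YOLO label line (hypothetical helper)."""
    x_min, y_min, x_max, y_max = box
    if not (0 <= x_min < x_max <= img_w and 0 <= y_min < y_max <= img_h):
        raise ValueError("box is empty or lies outside the image")
    # YOLO stores the box center and size, normalized to [0, 1] by the image size
    x_c = (x_min + x_max) / 2 / img_w
    y_c = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


def from_yolo_label(line, img_w, img_h):
    """Inverse conversion, useful for drawing labels back onto an image to check them."""
    cls, x_c, y_c, w, h = line.split()
    x_c, w = float(x_c) * img_w, float(w) * img_w
    y_c, h = float(y_c) * img_h, float(h) * img_h
    return int(cls), (x_c - w / 2, y_c - h / 2, x_c + w / 2, y_c + h / 2)


line = to_yolo_label(0, (100, 200, 300, 400), 640, 480)
print(line)  # 0 0.312500 0.625000 0.312500 0.416667
print(from_yolo_label(line, 640, 480))
```

Converting back to pixels makes it easy to spot mislabeled pictures by drawing the boxes over the original images.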

The camera

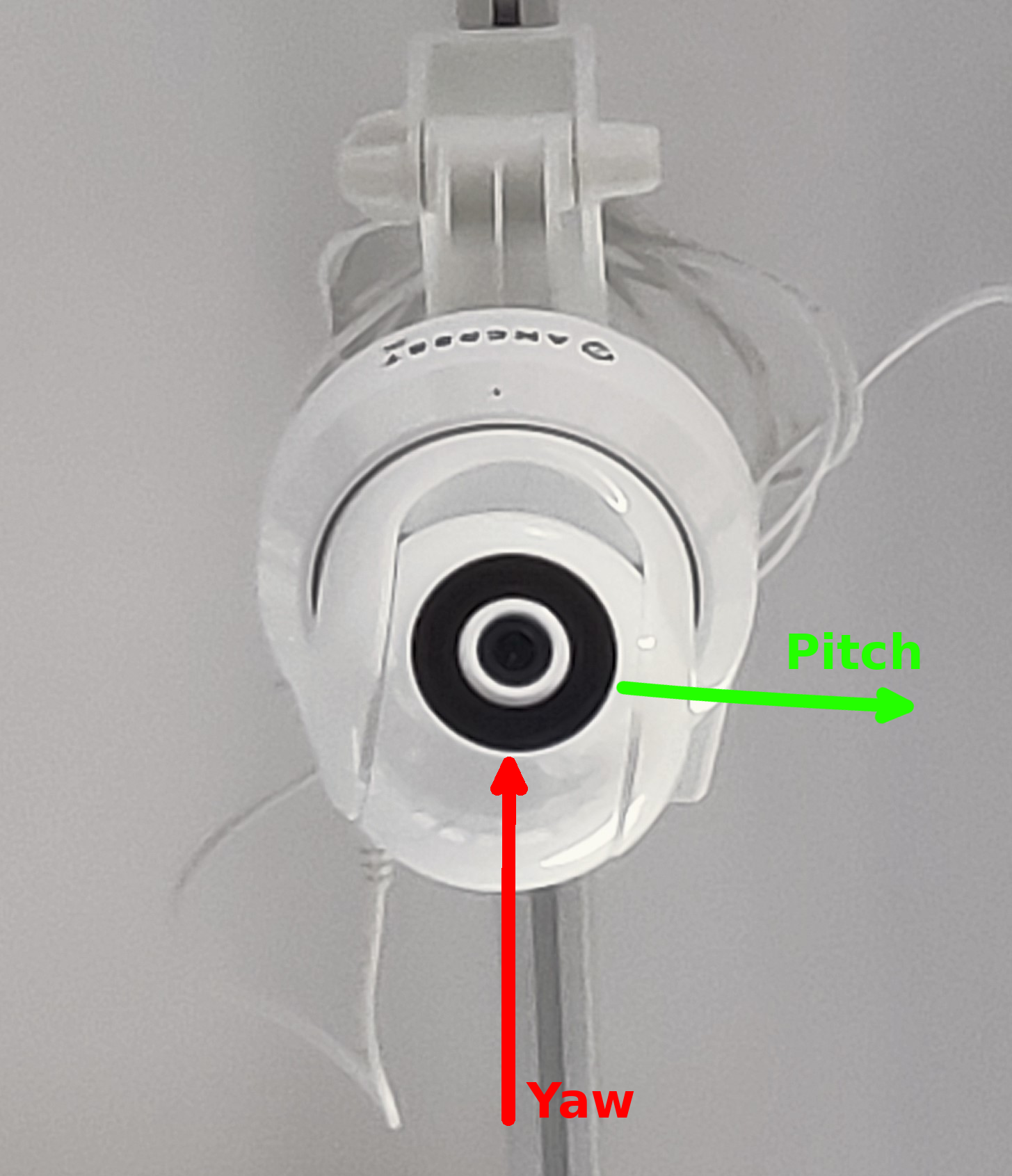

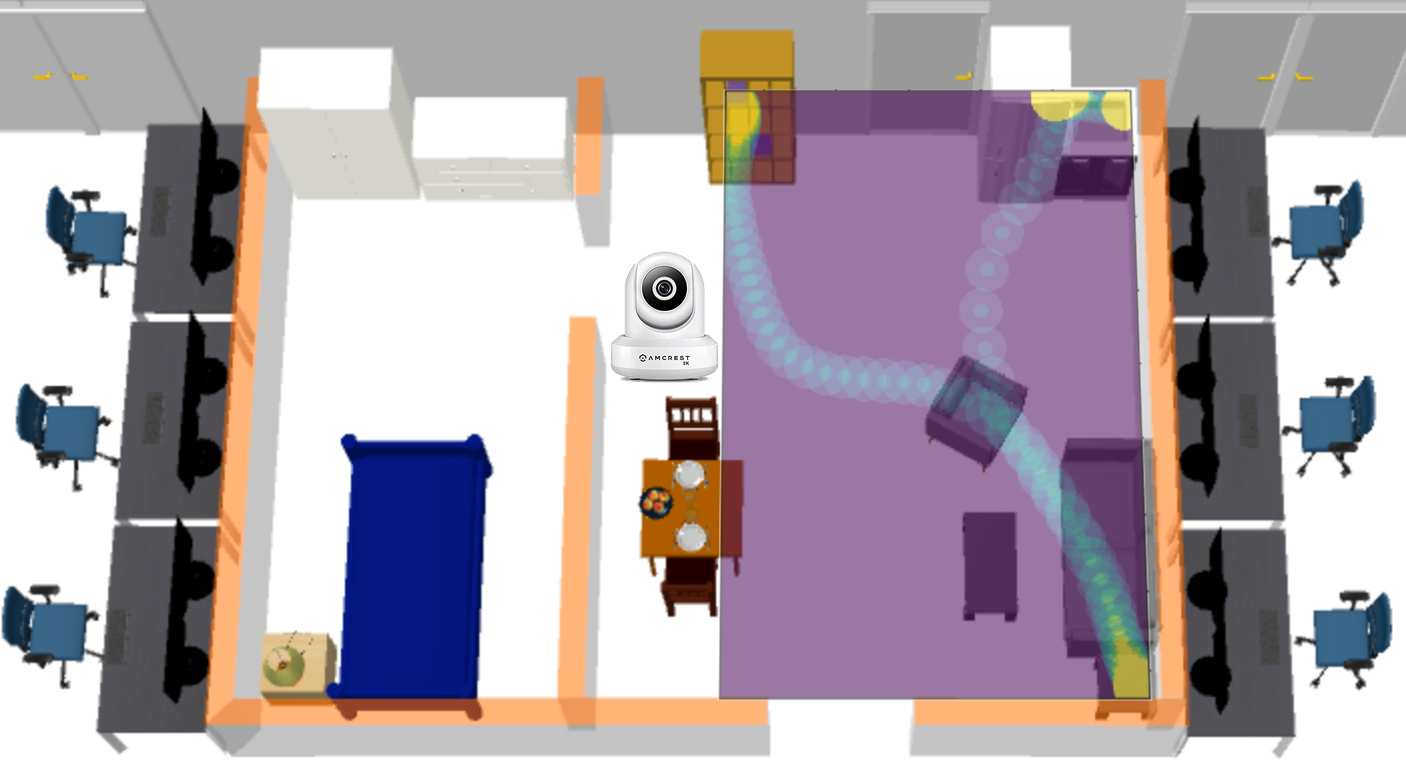

The camera used in this project was an Amcrest IP3M-941W-UK, mounted on the ceiling of the simulated apartment.

The camera was controlled through a Python library, so most of the rest of the project was also developed in Python.

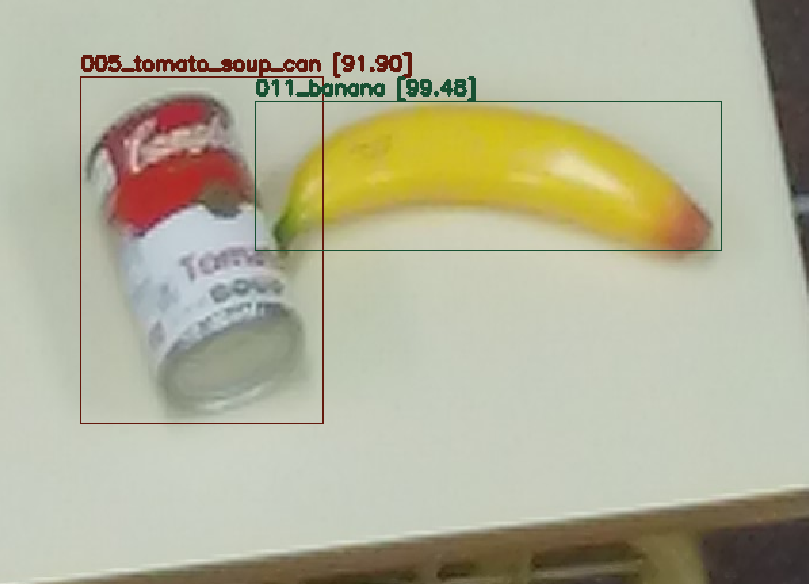

Although the dataset had been captured with a different camera, the resulting model was still able to find the trained objects with very high precision.

Efficient search

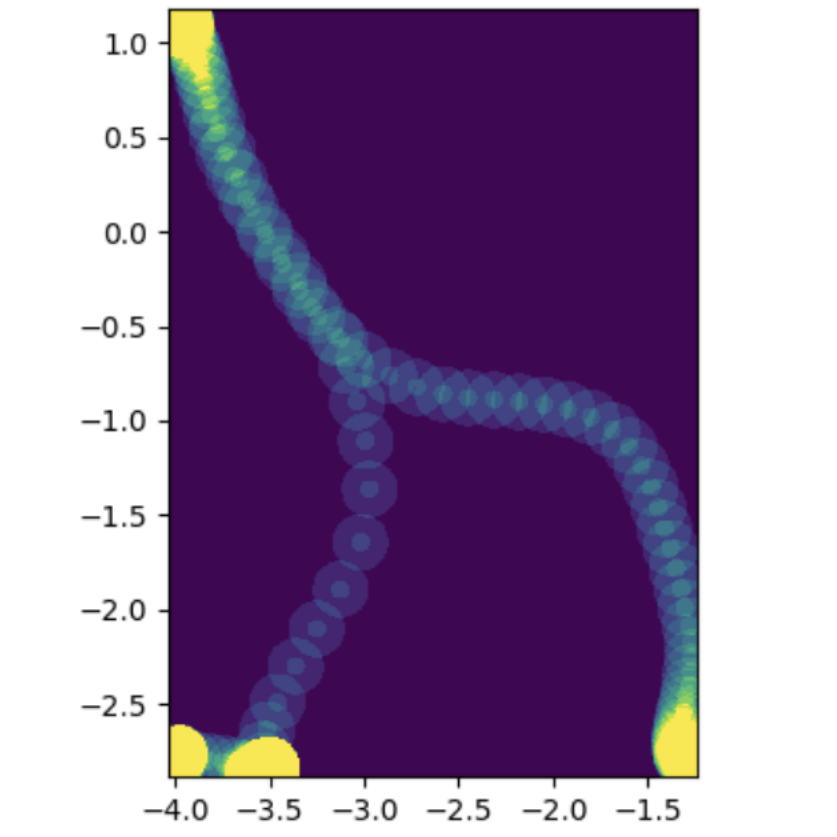

Although the camera could now detect whether an object was in its field of view, it also had to find the object as fast as possible. To achieve this, an object was tracked inside the apartment throughout the day. This yielded a heatmap describing the likelihood of finding the object at each position depending on the time of day.
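A per-hour heatmap of this kind can be sketched as a normalized 2D histogram of the tracked positions. This is a minimal illustration, not the thesis code: it assumes samples stored as (seconds since midnight, x, y), a rectangular floor extent in meters, and a hypothetical function name `hourly_heatmap`.

```python
import numpy as np


def hourly_heatmap(samples, hour, bins=(20, 20), extent=((0.0, 10.0), (0.0, 8.0))):
    """Probability grid of the object's position during one hour of the day.

    samples: sequence of (seconds_since_midnight, x, y) tracker readings.
    extent: ((x_min, x_max), (y_min, y_max)) of the apartment floor (assumed values).
    """
    samples = np.asarray(samples, dtype=float)
    # Keep only the readings recorded during the requested hour
    in_hour = (samples[:, 0] // 3600).astype(int) == hour
    counts, _, _ = np.histogram2d(samples[in_hour, 1], samples[in_hour, 2],
                                  bins=bins, range=extent)
    total = counts.sum()
    # Normalize so each cell holds the estimated probability of finding the object there
    return counts / total if total > 0 else counts


readings = [(8 * 3600 + 10, 1.0, 1.0), (8 * 3600 + 20, 1.2, 1.1),
            (8 * 3600 + 30, 9.0, 7.0), (20 * 3600, 5.0, 5.0)]
print(hourly_heatmap(readings, hour=8, bins=(2, 2)))
```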

Projecting this probability map onto the apartment allows the possible sites to be discretized into a small number of locations. In this example, the object could be in three different locations: the bookshelf, the sink or the table.
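The source does not state which clustering method was used to turn the map into locations. As a stand-in, the sketch below uses plain k-means (Lloyd's algorithm) with a deterministic farthest-point initialization, and takes the probability of each location to be the share of tracked samples in its cluster. The function names and the choice of k-means are assumptions.

```python
import numpy as np


def _assign(points, centers):
    # Index of the nearest center for every point
    dists = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=2)
    return np.argmin(dists, axis=1)


def discretize_locations(points, k, iters=100):
    """Cluster tracked (x, y) positions into k locations (k-means, used here as a stand-in)."""
    points = np.asarray(points, dtype=float)
    # Farthest-point initialization: deterministic and spreads the initial centers
    centers = [points[0]]
    for _ in range(1, k):
        nearest = np.min([np.linalg.norm(points - c, axis=1) for c in centers], axis=0)
        centers.append(points[np.argmax(nearest)])
    centers = np.array(centers)
    # Lloyd iterations: assign points to the nearest center, then move each center to its mean
    for _ in range(iters):
        labels = _assign(points, centers)
        new_centers = np.array([
            points[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    labels = _assign(points, centers)
    # Probability of each location = fraction of tracked samples in its cluster
    probs = np.bincount(labels, minlength=k) / len(points)
    return centers, probs


pts = [(0, 0), (0.1, 0), (0, 0.1),
       (5, 5), (5.1, 5), (5, 5.1), (5.1, 5.1),
       (10, 0), (10, 0.1), (10.1, 0)]
centers, probs = discretize_locations(pts, 3)
print(centers, probs)
```

With k = 3 this would yield three locations, such as the bookshelf, sink and table of the example, together with their probabilities, which is the information the search models need.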

In order to look through the different locations to find the object as fast as possible, three models were considered:

- Sorting by %: This model checks the presets in order of decreasing probability.

- Current configuration’s best: This model takes into account the current position of the camera and moves to the preset with the highest probability × proximity value.

- Brute force: This model takes into account the current position of the camera and recursively checks every possible path the camera can take, then selects the one with the highest score. The score of a path is the sum of the scores of every move the camera makes to a new configuration.
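The three models can be sketched in a few lines. The source does not define proximity, so this sketch assumes a hypothetical `proximity` equal to 1 / (1 + distance) between camera configurations, and scores each move as probability × proximity, summed over the path for the brute-force model. Presets are stored as name → (probability, (pan, tilt)); all names and values are illustrative.

```python
import math
from itertools import permutations


def proximity(a, b):
    # Hypothetical proximity: 1 for the same configuration, decaying with distance
    return 1.0 / (1.0 + math.dist(a, b))


def sort_by_probability(presets):
    # Model 1: visit presets in order of decreasing probability
    return sorted(presets, key=lambda name: presets[name][0], reverse=True)


def current_configuration_best(presets, start):
    # Model 2: greedily move to the preset with the highest probability * proximity
    order, current, remaining = [], start, list(presets)
    while remaining:
        best = max(remaining, key=lambda n: presets[n][0] * proximity(current, presets[n][1]))
        order.append(best)
        remaining.remove(best)
        current = presets[best][1]
    return order


def path_score(order, presets, start):
    # Sum of the per-move scores along a path
    score, current = 0.0, start
    for name in order:
        prob, pose = presets[name]
        score += prob * proximity(current, pose)
        current = pose
    return score


def brute_force(presets, start):
    # Model 3: try every visiting order and keep the best-scoring one
    return list(max(permutations(presets), key=lambda order: path_score(order, presets, start)))


presets = {
    "bookshelf": (0.5, (3.0, 0.0)),
    "sink": (0.3, (1.0, 0.0)),
    "table": (0.2, (2.0, 0.0)),
}
start = (0.0, 0.0)
print(sort_by_probability(presets))                # ['bookshelf', 'sink', 'table']
print(current_configuration_best(presets, start))  # ['sink', 'bookshelf', 'table']
print(brute_force(presets, start))                 # ['sink', 'table', 'bookshelf']
```

On this toy example the three models return three different orders. Brute force is exact under its scoring rule but grows factorially with the number of presets, which is only acceptable for the handful of locations an object usually has.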

With those three models, four tests were conducted:

- T1: Last set preset: With our implementation of the camera’s preset configuration, the camera remains at the last preset that was set, so we tested starting the search from there. This also happens to be the preset with the lowest probability of containing the object.

- T2: Highest probability preset: After testing the first option, we wanted to check whether moving the camera to the preset with the highest probability before starting the search made a difference.

- T3: Random location: This test checked whether leaving the camera looking at a random position before the search affected performance.

- T4: Random location with delay: Every time the camera moves from one location to another, there is a delay between the moment the camera stops moving and the moment the image stops moving. We added a wait every time the camera stopped moving and checked whether the results were noticeably different. This test heavily penalizes methods that stop at many places without finding the object.
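One way to compare visiting orders, and to see why T4 penalizes methods that stop often, is the expected time until the object is found: each preset's probability multiplied by the time elapsed when the camera reaches it. The sketch below assumes the object is at exactly one preset and is always detected there; `move_time`, the settle delay value and the function name are hypothetical.

```python
import math


def expected_search_time(order, presets, start, move_time, settle_delay=0.0):
    """Expected time until the object is found when presets are visited in `order`.

    Assumes the object is at exactly one preset (probabilities sum to 1) and is
    always detected as soon as the camera looks at that preset.
    """
    elapsed, current, expected = 0.0, start, 0.0
    for name in order:
        prob, pose = presets[name]
        # Time to reach this preset: the move itself plus the wait for the image to settle
        elapsed += move_time(current, pose) + settle_delay
        expected += prob * elapsed
        current = pose
    return expected


presets = {
    "bookshelf": (0.5, (3.0, 0.0)),
    "sink": (0.3, (1.0, 0.0)),
    "table": (0.2, (2.0, 0.0)),
}
start = (0.0, 0.0)
move_time = math.dist  # hypothetical: one second per unit of pan/tilt distance
for order in (["bookshelf", "sink", "table"], ["sink", "table", "bookshelf"]):
    print(order,
          expected_search_time(order, presets, start, move_time),
          expected_search_time(order, presets, start, move_time, settle_delay=2.0))
```

Because every stop adds `settle_delay`, the delay contributes `settle_delay` multiplied by the expected number of stops, so orders that visit unlikely presets first lose more time.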

Robot commanding

Once the object had been found as efficiently as possible, the natural next step was to have the TIAGo mobile manipulator move to that location.

This would allow the robot to interact with the object. For example, the system could look for some pills and have the robot bring them to the patient.
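The source does not describe how the detected location is turned into a goal for the robot. A minimal sketch, assuming the object position is known in the map frame and that the robot should stop a hypothetical `standoff` distance short of it while facing it; the resulting (x, y, yaw) could then be sent as a 2D navigation goal, for example to a move_base-style navigation stack, with the yaw encoded as a quaternion. Both helper names are hypothetical.

```python
import math


def approach_goal(robot_xy, object_xy, standoff=0.8):
    """Hypothetical helper: (x, y, yaw) goal `standoff` meters short of the object, facing it."""
    dx, dy = object_xy[0] - robot_xy[0], object_xy[1] - robot_xy[1]
    dist = math.hypot(dx, dy)
    yaw = math.atan2(dy, dx)
    if dist <= standoff:
        # Already close enough: stay in place and only turn towards the object
        return robot_xy[0], robot_xy[1], yaw
    # Move along the straight line to the object, stopping `standoff` meters before it
    scale = (dist - standoff) / dist
    return robot_xy[0] + dx * scale, robot_xy[1] + dy * scale, yaw


def yaw_to_quaternion(yaw):
    # Rotation about the vertical axis as (x, y, z, w), the layout of a ROS Quaternion message
    return 0.0, 0.0, math.sin(yaw / 2), math.cos(yaw / 2)


x, y, yaw = approach_goal((0.0, 0.0), (3.0, 4.0), standoff=1.0)
print(x, y, yaw_to_quaternion(yaw))
```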

ROS

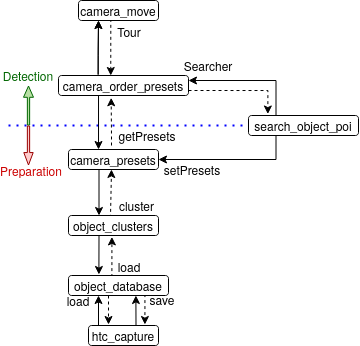

The system was implemented in the lab's ROS framework, through which it could communicate with the TIAGo. It was split into the following nodes:

- htc_capture: Records the position of an HTC tracker or controller (simulating an object).

- object_database: Serves as a storage for all previously saved detections.

- object_clusters: Generates clusters from the data stored in the database, which represent where the object usually is.

- camera_presets: Prepares the camera to look at the usual locations of a given object.

- search_object_poi: Asks the system to prepare the search for an object, or to search for it. It can also send a TIAGo manipulator robot to a location.

- camera_order_presets: Given the camera’s configuration, it computes the order in which locations have to be visited.

- camera_move: Moves the camera to a desired location and runs the object detection algorithm to check whether a desired object is present.

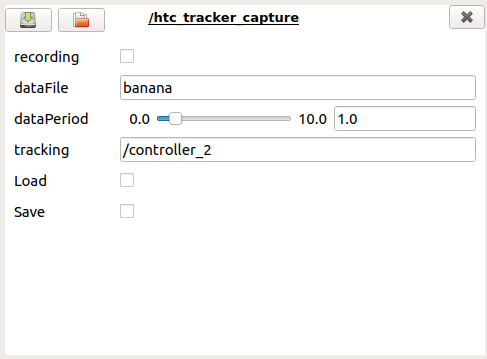

GUI: object tracker

In order to generate the heatmaps, a GUI that relied on ROS's dynamic_reconfigure package was developed:

- recording: boolean that can easily toggle recording on/off.

- dataFile: name of the object we want to operate with.

- dataPeriod: sets the rate at which the data is saved, as the number of recordings per second.

- tracking: name of the tracker whose data we want to store.

- Load: makes a request to the database for the data corresponding to the object with the name specified in the "dataFile" variable.

- Save: sends the information recorded locally to the database, and requests that it be saved.
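The recording side of this GUI can be sketched as a small class that mirrors its fields: it only stores poses while `recording` is enabled, only from the tracker named in `tracking`, and at most `dataPeriod` samples per second. The class, its methods and the save payload format are hypothetical, and the ROS wiring (dynamic_reconfigure callbacks, database service) is left out.

```python
class TrackerRecorder:
    """Hypothetical sketch of the local recording logic behind the tracker GUI."""

    def __init__(self, data_file, tracking, data_period):
        self.data_file = data_file      # object name, as in the "dataFile" field
        self.tracking = tracking        # tracker name, as in the "tracking" field
        self.data_period = data_period  # recordings per second, as in "dataPeriod"
        self.recording = False          # toggled by the "recording" checkbox
        self.samples = []
        self._last_t = None

    def on_pose(self, tracker, t, x, y, z):
        """Handle one incoming tracker pose, keeping at most data_period samples per second."""
        if not self.recording or tracker != self.tracking:
            return
        if self._last_t is not None and t - self._last_t < 1.0 / self.data_period:
            return
        self.samples.append((t, x, y, z))
        self._last_t = t

    def save_request(self):
        """Payload the "Save" action could send to the database (format is an assumption)."""
        return {"object": self.data_file, "samples": list(self.samples)}


recorder = TrackerRecorder("apple", "tracker_1", data_period=2)
recorder.recording = True
for t in (0.0, 0.25, 0.5, 0.75, 1.0):
    recorder.on_pose("tracker_1", t, 1.0, 2.0, 0.5)
print(recorder.save_request())
```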

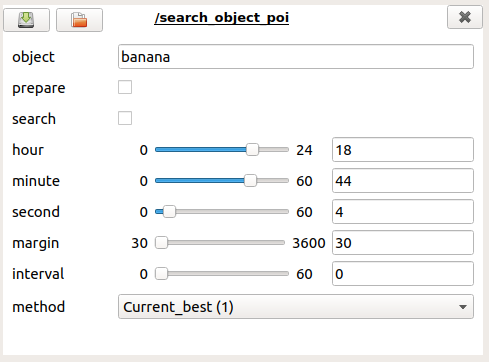

GUI: object locator

In order to locate an object, a GUI that relied on ROS's dynamic_reconfigure package was developed:

- object: name of the object we want to search or prepare the system to search.

- prepare: boolean that acts as a button. Once pressed, it stays pressed until the attempt to prepare the system for the object has completed.

- search: boolean that acts as a button. Once pressed, it stays pressed until the attempt to find the object has completed.

- hour: positive integer that represents the hour in which we want to center the search for the object.

- minute: positive integer that represents the minute in which we want to center the search for the object.

- second: positive integer that represents the second in which we want to center the search for the object.

- margin: positive integer that sets the amount of data processed by the time-dependent clustering algorithm: all data within "Center ± margin" seconds is used.

- interval: positive integer giving the number of seconds between consecutive data logs taken into account by the clustering algorithm.

- method: integer with three possible values (0-2) selecting the search method (described under "Efficient search").
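The time-dependent selection driven by hour, minute, second, margin and interval can be sketched as follows. This assumes samples stored as (seconds since midnight, x, y); the window is treated as circular so that a search centered near midnight also picks up data from just before or after it, which is an assumption rather than documented behavior. The function name is hypothetical.

```python
DAY = 24 * 3600


def select_window(samples, hour, minute, second, margin, interval):
    """Samples within center ± margin seconds, spaced at least `interval` seconds apart."""
    center = hour * 3600 + minute * 60 + second
    in_window = []
    for t, x, y in samples:
        # Signed offset from the center in [-DAY/2, DAY/2), wrapping around midnight
        offset = (t - center + DAY // 2) % DAY - DAY // 2
        if abs(offset) <= margin:
            in_window.append((offset, t, x, y))
    in_window.sort()
    selected, last = [], None
    for offset, t, x, y in in_window:
        # Keep a sample only if it is at least `interval` seconds after the last kept one
        if last is None or offset - last >= interval:
            selected.append((t, x, y))
            last = offset
    return selected


log = [(28700, 0, 0), (28750, 1, 1), (28760, 2, 2), (28790, 3, 3),
       (28800, 4, 4), (28830, 5, 5), (28861, 6, 6)]
print(select_window(log, hour=8, minute=0, second=0, margin=60, interval=30))
```

The selected samples would then be the input to the clustering step that produces the locations and their probabilities.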

Final demo

After implementing all this, here is a demo of how the system behaves when asked to look for an apple or a can of Pringles: